"The greatest enemy of knowledge is not ignorance, it is the illusion of knowledge." — Stephen Hawking

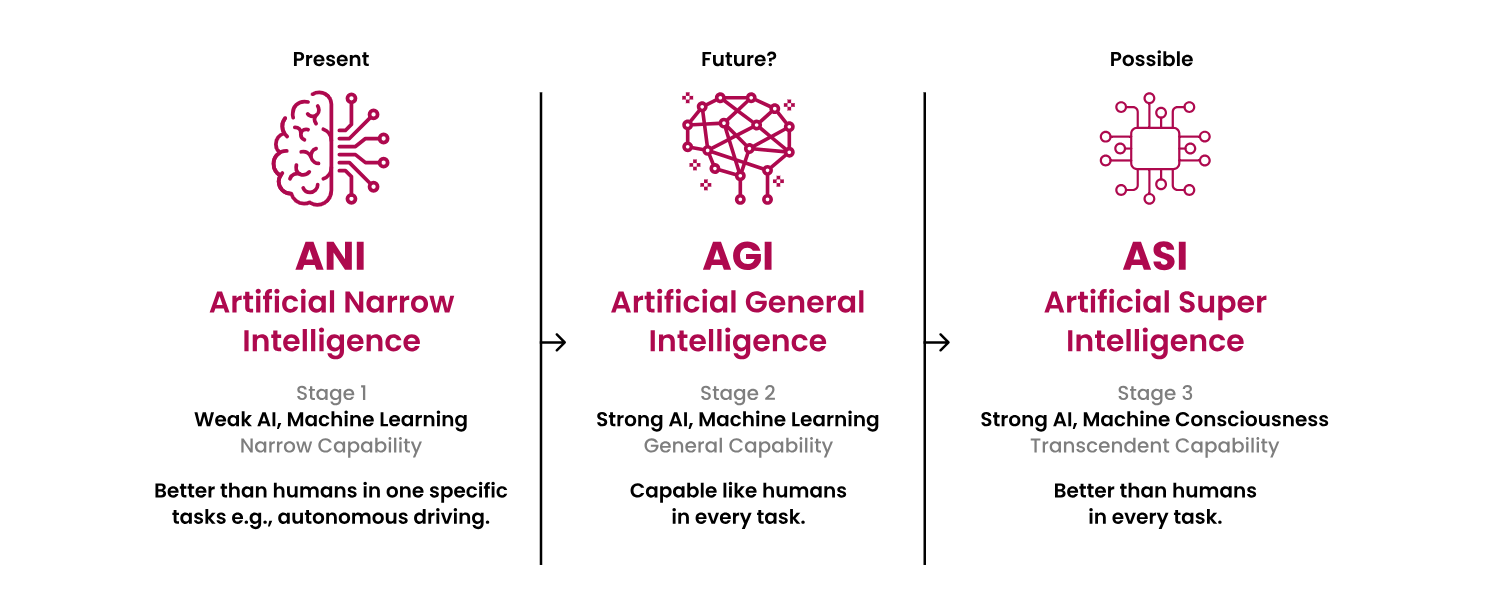

We are unlikely to see AGI by 2030, and certainly not through LLMs.

AGI is not just a computer science and mathematics problem; it's also a biology problem and a physics problem. There is so much hype in the AI space, but when you look past the noise, we have barely scratched the surface.

We're not going to LLM our way to AGI; that's probably never going to happen. AI companies will obviously tell you otherwise, because they benefit from that narrative (hype drives usage, investment, and dominance in the game). A few points I'll make:

1) LLMs are not grounded in physics, they are not embodied, and they are not irrational the way humans are. All three are core to why humans are intelligent. Human intelligence is wanting to knock over a cyclist because he is one of 100 cyclists blocking your way, then going to X/Twitter to say ALL cyclists are bad. The best breakthroughs are often built on irrationality, on things that are 'wrong' from an optimisation perspective. Counter-intuitively, that is what human intelligence is: not a straightforward optimisation problem.

2) Hallucinations in AI are seen as a bad thing, but I think hallucinations are what will lead to novelty and the kind of generalisation we want. Intelligence is often a 'hallucination' of what you think the truth is, and then building on top of that (the cyclist example). Or consider trusting your partner with 100% certainty and forming beliefs based on that 'hallucination'. As a result, you get two humans who can connect deeply and have conversations that are irrational, yet emotionally connected.

3) Many researchers say AGI is just reinforcement learning (RL) + unsupervised learning (UL). I think that's incomplete. AGI is RL, UL, predictive processing, and embodied cognition.

4) It's also worth noting that while many AI breakthroughs seem innovative within computer science, they often mirror mechanisms long understood in biology. Most algorithms don't fundamentally reshape how we understand cognition; they externalise it. Many AI researchers are simply not well versed in biology, so they miss this. AI is less about inventing new forms of intelligence and more about replicating biological cognition in artificial systems. This does not mean that the AI revolution has not triggered existential questions, because it has. It also makes us think about whether there are constraints in biological systems that can be applied to artificial (currently discrete) systems.

Don't get me wrong, many industries will be (and are being) fundamentally revolutionised, and AI does not need to be AGI to pose an existential risk.